Duplicate Meta Tags Caused by Indexed Paginated Pages

I recently found that the SCG blog posts had duplicate meta tag issues. I came across the problem after a technical SEO discussion about pagination with someone from the SEO industry. During that discussion I said that paginated pages should be indexed. Afterwards, I took a deep dive into the topic and analysed the pros and cons of pagination.

The duplicate meta tags on the SCG blog posts are a technical problem that occurred because the paginated pages were indexable. In most cases, paginated pages add no value despite being indexed on the SERP.

Pagination is a clever way of distributing content across pages, and users may even click through those pages to discover the content. However, on the SERP they bear the same meta tags and context as the level 1 page. Consequently, issues like keyword cannibalization, duplicate content clusters, and index bloat arise.
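To make the problem concrete, here is a minimal sketch of how duplicate meta tags could be spotted across a set of crawled pages. It is not the tool I used; `MetaExtractor`, `find_duplicate_meta`, and the example.com URLs in the usage note are hypothetical names chosen for illustration, and the input is assumed to be a mapping of URL to raw HTML.

```python
from collections import defaultdict
from html.parser import HTMLParser


class MetaExtractor(HTMLParser):
    """Collects the <title> text and the meta description of one HTML page."""

    def __init__(self):
        super().__init__()
        self.title = ""
        self.description = ""
        self._in_title = False

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "title":
            self._in_title = True
        elif tag == "meta" and (attrs.get("name") or "").lower() == "description":
            self.description = (attrs.get("content") or "").strip()

    def handle_endtag(self, tag):
        if tag == "title":
            self._in_title = False

    def handle_data(self, data):
        # Only text that appears inside <title>...</title> is kept.
        if self._in_title:
            self.title += data


def find_duplicate_meta(pages):
    """Group URLs that share the same (title, description) pair.

    `pages` maps URL -> raw HTML (an assumed input format).
    Only groups containing more than one URL are returned.
    """
    groups = defaultdict(list)
    for url, html in pages.items():
        parser = MetaExtractor()
        parser.feed(html)
        groups[(parser.title.strip(), parser.description)].append(url)
    return {meta: urls for meta, urls in groups.items() if len(urls) > 1}
```

Passing in the HTML of `https://example.com/blog/` and `https://example.com/blog/page/2/` with identical titles and descriptions would return one group containing both URLs, which is the pattern an indexed paginated series tends to produce.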

Aiming to improve the bot experience on the site's blog posts, I set all the paginated pages I encountered to noindex, follow.

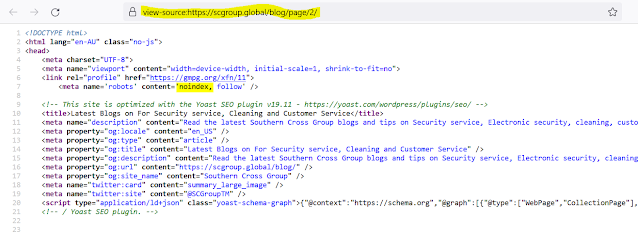

Before No-Index: the screenshot shows the "Meta Robots" and "Canonical" tags.

This is not a big problem in my case. But “Pagination means big trouble for big sites, especially for a site with 50K+ pages indexed on Google SERP.”

Paginated pages on the SERP add no value. Every page in a paginated series carries the same meta tags, which makes keyword cannibalization worse.

To prevent this, we added noindex, follow to all the paginated blog pages. This helps with two things:

- Preserve the precious crawl budget.

- Prevent the occurrence of keyword cannibalization.
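As a rough illustration of the noindex, follow rule, the sketch below picks the robots meta tag for a page in a paginated series. The function name `robots_meta_tag` is hypothetical, and it assumes that page 1 (the main listing) stays indexable while page 2 onwards is excluded from the index; the actual setup depends on your CMS or SEO plugin.

```python
def robots_meta_tag(page_number):
    """Return the robots meta tag for a page in a paginated series.

    Assumption: page 1 is the main listing and stays indexable; page 2 and
    beyond get "noindex, follow" so their links can still be followed.
    """
    if page_number < 1:
        raise ValueError("page_number must be 1 or greater")
    directive = "index, follow" if page_number == 1 else "noindex, follow"
    return f'<meta name="robots" content="{directive}">'
```

For example, `robots_meta_tag(3)` yields `<meta name="robots" content="noindex, follow">`, which would go in the `<head>` of the third page of the blog listing.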

As a result, I managed to get unique meta tags for as many SCG pages as possible.

How will this help?

- The amount of perceived duplicate content that search engine bots infer drops dramatically (i.e., issues like keyword cannibalization and index bloat are greatly reduced).

- This will help SCG blog posts with crawl budget optimization as well.

- Instead of crawling paginated pages whose excerpts are largely similar, Google can spend its crawl on the more valuable pages, which should then stay fresher in Google SERPs.

Another perspective, with an example:

If I have 5000 products, I can’t put them all on one page. I will put them on five pages, or 1000 pages, or whatever.

So that’s kind of where pagination comes in. And from my point of view, it’s important that we can recognize this kind of pagination and essentially index those individual pages.

Usually there are pros and cons:

Pros: 1. Internal links to our other pieces of content, 2. Increased crawl depth (crawling and rendering in particular), 3. Dwell time, etc.

Cons: 1. A lot of new URLs, 2. Duplicate meta tags, 3. Keyword cannibalization issues.

So in particular, one recommendation is to have separate URLs for each page, so that we can still go to the individual pages and have links to them. For the user, it’s fine to load the next page when they scroll down to the bottom.
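The "separate URL for each page" idea, applied to the 5000-product example, can be sketched as follows. The `?page=N` query parameter, the function name `paginated_urls`, and the example.com base URL are assumptions for illustration; your site may use a path such as `/page/2/` instead.

```python
import math


def paginated_urls(base_url, total_items, per_page):
    """Return one distinct URL per page of a paginated listing.

    Page 1 uses the base URL itself; later pages append ?page=N
    (an assumed URL scheme, not a requirement).
    """
    if per_page < 1:
        raise ValueError("per_page must be 1 or greater")
    page_count = max(1, math.ceil(total_items / per_page))
    return [
        base_url if n == 1 else f"{base_url}?page={n}"
        for n in range(1, page_count + 1)
    ]
```

With 5000 products, `paginated_urls("https://example.com/products", 5000, 1000)` gives five URLs, while a page size of 5 gives 1000 URLs. Either way, every page has its own address that can be linked to and crawled.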

Should all paginated pages be indexed? Yes.

As I mentioned, if there is something on a page that we want Google to know about, such as a link to a different product or part of a longer piece of content, then that page has to be indexed.
